Andrew Cater: 20231123 - UEFI install on a Raspberry Pi 4 - step by step instructions to a modified d-i

Motivation

Andy (RattusRattus) and I have been formalising instructions for using Pete Batard's version of Tianocore (and therefore UEFI booting) for the Raspberry Pi 4 together with a Debian arm64 netinst to make a modified Debian installer on a USB stick which "just works" for a Raspberry Pi 4.

Thanks also to Steve McIntyre for initial notes that got this working for us and also to Emmanuele Rocca for putting up some useful instructions for copying.

Recipe

Plug in a USB stick - use dmesg or your favourite method to see how it is identified.

Make a couple of mount points under /mnt - /mnt/data and /mnt/cdrom
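As a small sketch of those two preparation steps: `lsblk` is one alternative to dmesg for spotting the stick, and the helper names `list_disks` and `make_mount_points` are made up for illustration, not part of the original recipe.

```shell
# List whole disks with their transport type; a USB stick normally
# shows "usb" in the TRAN column.
list_disks() {
    lsblk -d -o NAME,SIZE,TRAN,MODEL
}

# Create the two mount points used in the recipe.
# The prefix defaults to /mnt; it is a parameter only so the function
# can be tried in a scratch directory first.
make_mount_points() {
    prefix=${1:-/mnt}
    mkdir -p "$prefix/data" "$prefix/cdrom"
}
```

On the real system this boils down to running `sudo mkdir -p /mnt/data /mnt/cdrom` once.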

1. Grab a USB stick, partition using MBR. Make a single VFAT partition, type 0xEF (i.e. EFI System Partition)

For a USB stick (identified as sdX) below:

$ sudo parted --script /dev/sdX mklabel msdos

$ sudo parted --script /dev/sdX mkpart primary fat32 0% 100%

$ sudo mkfs.vfat /dev/sdX1

$ sudo mount /dev/sdX1 /mnt/data/

Download an arm64 netinst.iso

https://cdimage.debian.org/debian-cd/current/arm64/iso-cd/debian-12.2.0-arm64-netinst.iso
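It may be worth checking the download before copying it to the stick. Debian publishes a SHA256SUMS file in the same directory as the ISO; the sketch below assumes that file has been downloaded next to the image, and the function name `verify_download` is invented for this example.

```shell
# Check every file in DIR that is listed in DIR/SHA256SUMS.
# --ignore-missing skips the entries for images that were not downloaded;
# sha256sum still fails if nothing at all could be verified.
verify_download() {
    dir=${1:-.}
    ( cd "$dir" && sha256sum --ignore-missing -c SHA256SUMS )
}
```

Run it as `verify_download .` in the download directory. A non-zero exit status means the image should be fetched again.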

2. Copy the complete contents of partition *1* from a Debian arm64 installer image into the filesystem (partition 1 is the installer stuff itself) on the USB stick, in /

$ sudo kpartx -v -a debian-12.2.0-arm64-netinst.iso

# Mount the first partition on the ISO and copy its contents to the stick

$ sudo mount /dev/mapper/loop0p1 /mnt/cdrom/

$ sudo rsync -av /mnt/cdrom/ /mnt/data/

$ sudo umount /mnt/cdrom

3. Copy the complete contents of partition *2* from that Debian arm64 installer image into that filesystem (partition 2 is the ESP) on the USB stick, in /

# Same story with the second partition on the ISO

$ sudo mount /dev/mapper/loop0p2 /mnt/cdrom/

$ sudo rsync -av /mnt/cdrom/ /mnt/data/

$ sudo umount /mnt/cdrom

$ sudo kpartx -d debian-12.2.0-arm64-netinst.iso

$ sudo umount /mnt/data

4. Grab the rpi edk2 build from https://github.com/pftf/RPi4/releases (I used 1.35) and extract it. I copied the files there into *2* places for now on the USB stick:

/ (so the Pi will boot using it)

/rpi4 (so we can find the files again later)
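A minimal sketch of that double copy, assuming the release zip has already been extracted into a directory and the stick is mounted again on /mnt/data (the commands above unmount it at the end of step 3). The function name `install_firmware` and the example paths are illustrative only.

```shell
# Copy the extracted firmware files to the root of the stick and
# again into /rpi4 on the stick.
install_firmware() {
    fw_dir=$1   # directory holding the extracted firmware files
    stick=$2    # mount point of the USB stick, /mnt/data in this recipe
    mkdir -p "$stick/rpi4" &&
    cp -r "$fw_dir/." "$stick/" &&       # so the Pi will boot using it
    cp -r "$fw_dir/." "$stick/rpi4/"     # so finish-rpi can find it later
}
```

For example, after extracting the zip into a hypothetical /tmp/rpi4-fw, run `install_firmware /tmp/rpi4-fw /mnt/data` as root.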

5. Add the preseed.cfg file (attached) into *both* of the two initrd files on the USB stick

- /install.a64/initrd.gz and

- /install.a64/gtk/initrd.gz

cpio is an awful tool to use :-(. In each case:

$ cp /path/to/initrd.gz .

$ gunzip initrd.gz

$ echo preseed.cfg | cpio -H newc -o -A -F initrd

$ gzip -9v initrd

$ cp initrd.gz /path/to/initrd.gz
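Since the same sequence has to be run for both initrd files, it can be wrapped in a function. This is only a sketch of the steps above, assuming GNU cpio and gzip are installed; the name `append_preseed` is invented here, and the work happens in a temporary directory so that preseed.cfg is stored at the top level of the archive.

```shell
# Append a preseed file to a gzip-compressed initrd, in place.
append_preseed() {
    initrd_gz=$1   # e.g. /mnt/data/install.a64/initrd.gz
    preseed=$2     # path to the preseed.cfg to add
    work=$(mktemp -d) || return 1
    cp "$initrd_gz" "$work/initrd.gz" &&
    cp "$preseed" "$work/preseed.cfg" &&
    (
        cd "$work" &&
        gunzip initrd.gz &&
        # -A appends to the existing archive named by -F
        echo preseed.cfg | cpio -H newc -o -A -F initrd &&
        gzip -9 initrd
    ) &&
    cp "$work/initrd.gz" "$initrd_gz"
    status=$?
    rm -rf "$work"
    return $status
}
```

Call it once per initrd, for example `append_preseed /mnt/data/install.a64/initrd.gz preseed.cfg`. To confirm the result, `gunzip -c /mnt/data/install.a64/initrd.gz | cpio -t` should list preseed.cfg among the archive members.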

If you look at the preseed file, it will do a few things:

- Use an early_command to unmount /media (to work around Debian bug #1051964)

- Register a late_command call for /cdrom/finish-rpi (the next file - see below) to run at the end of the installation.

- Force grub installation also to the EFI removable media path, needed as the rpi doesn't store EFI boot variables.

- Stop the installer asking for firmware from removable media (as the rpi4 will ask for broadcom bluetooth fw that we can't ship. Can be ignored safely.)

6. Copy the finish-rpi script (attached) into / on the USB stick. It will be run at the end of the installation, triggered via the preseed. It does a couple of things:

- Copy the edk2 firmware files into the ESP on the system that's just been installed

- Remove shim-signed from the installed systems, as there's a bug that causes it to fail on rpi4. I need to dig into this to see what the issue is.

That's it! Run the installer as normal, all should Just Work (TM).
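If the installed system does not boot, one thing to look at is whether the ESP received both grub on the removable media path and the firmware files. The sketch below assumes the ESP is mounted on /boot/efi, that the arm64 removable path is EFI/BOOT/BOOTAA64.EFI, and that RPI_EFI.fd is one of the files shipped in the firmware zip. `check_esp` is a made-up helper name.

```shell
# Report whether the files this recipe relies on are present in the ESP.
check_esp() {
    esp=${1:-/boot/efi}
    rc=0
    for f in EFI/BOOT/BOOTAA64.EFI RPI_EFI.fd; do
        if [ -e "$esp/$f" ]; then
            echo "found: $f"
        else
            echo "missing: $f"
            rc=1
        fi
    done
    return $rc
}
```

Running `check_esp` on the installed system (or `check_esp /target/boot/efi` from a shell in the installer) prints one line per file and returns non-zero if anything is missing.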

Bluetooth didn't quite work: raspberrypi-firmware didn't install until I added a symlink for boot/efi to /boot/firmware.

20231127 - This may not be necessary any more, because the raspberrypi-firmware path has been fixed.

Preseed.cfg

# The preseed file itself causes a problem - the installer medium is

# left mounted on /media so things break in cdrom-detect. Let's see if

# we can fix that!

d-i preseed/early_command string umount /media || true

# Run our command to do rpi setup before reboot

d-i preseed/late_command string /cdrom/finish-rpi

# Force grub installation to the RM path

grub-efi-arm64 grub2/force_efi_extra_removable boolean true

# Don't prompt for missing firmware from removable media,

# e.g. broadcom bluetooth on the rpi.

d-i hw-detect/load_firmware boolean false

finish-rpi

#!/bin/sh

set -x

grep -q -a RPI4 /sys/firmware/acpi/tables/CSRT

if [ $? -ne 0 ]; then

echo "Not running on a Pi 4, exit!"

exit 0

fi

# Copy the rpi4 firmware binaries onto the installed system.

# Assumes the installer media is mounted on /cdrom.

cp -vr /cdrom/rpi4/. /target/boot/efi/.

# shim-signed doesn't seem happy on rpi4, so remove it

mount --bind /sys /target/sys

mount --bind /proc /target/proc

mount --bind /dev /target/dev

in-target apt-get remove --purge --autoremove -y shim-signed


Tomorrow is going to be a new first day in a new job for me: I am joining the

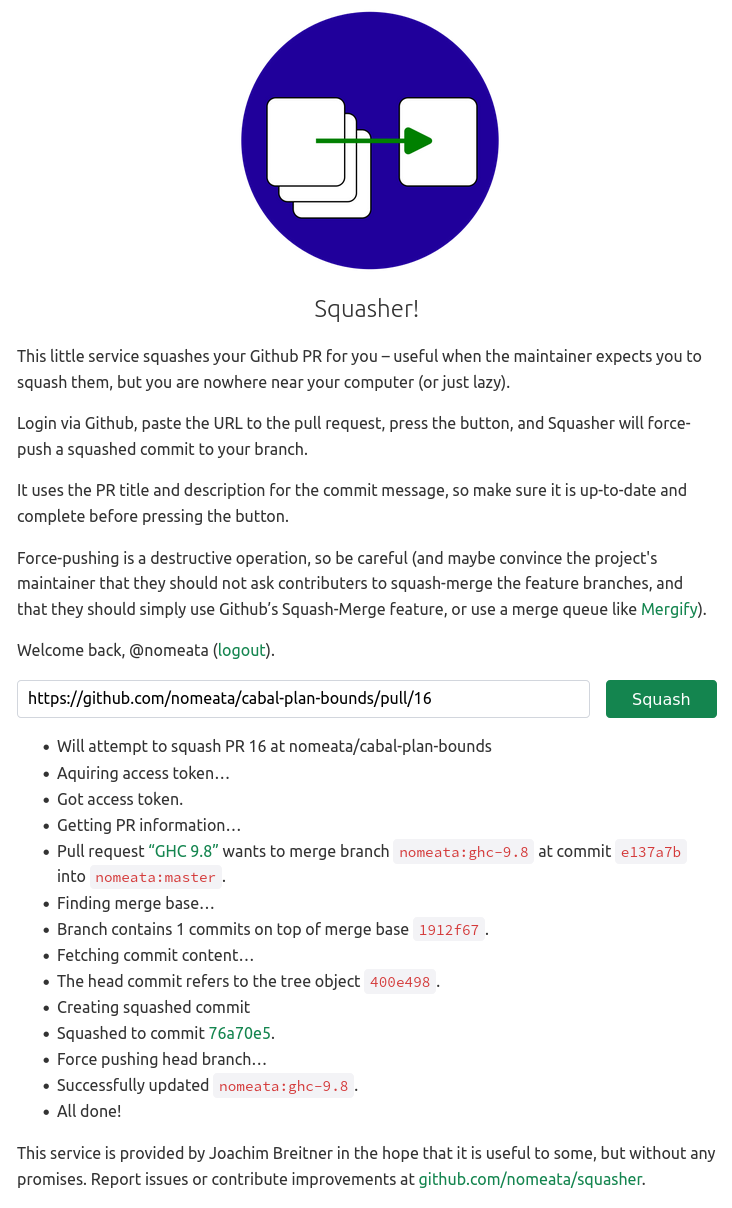

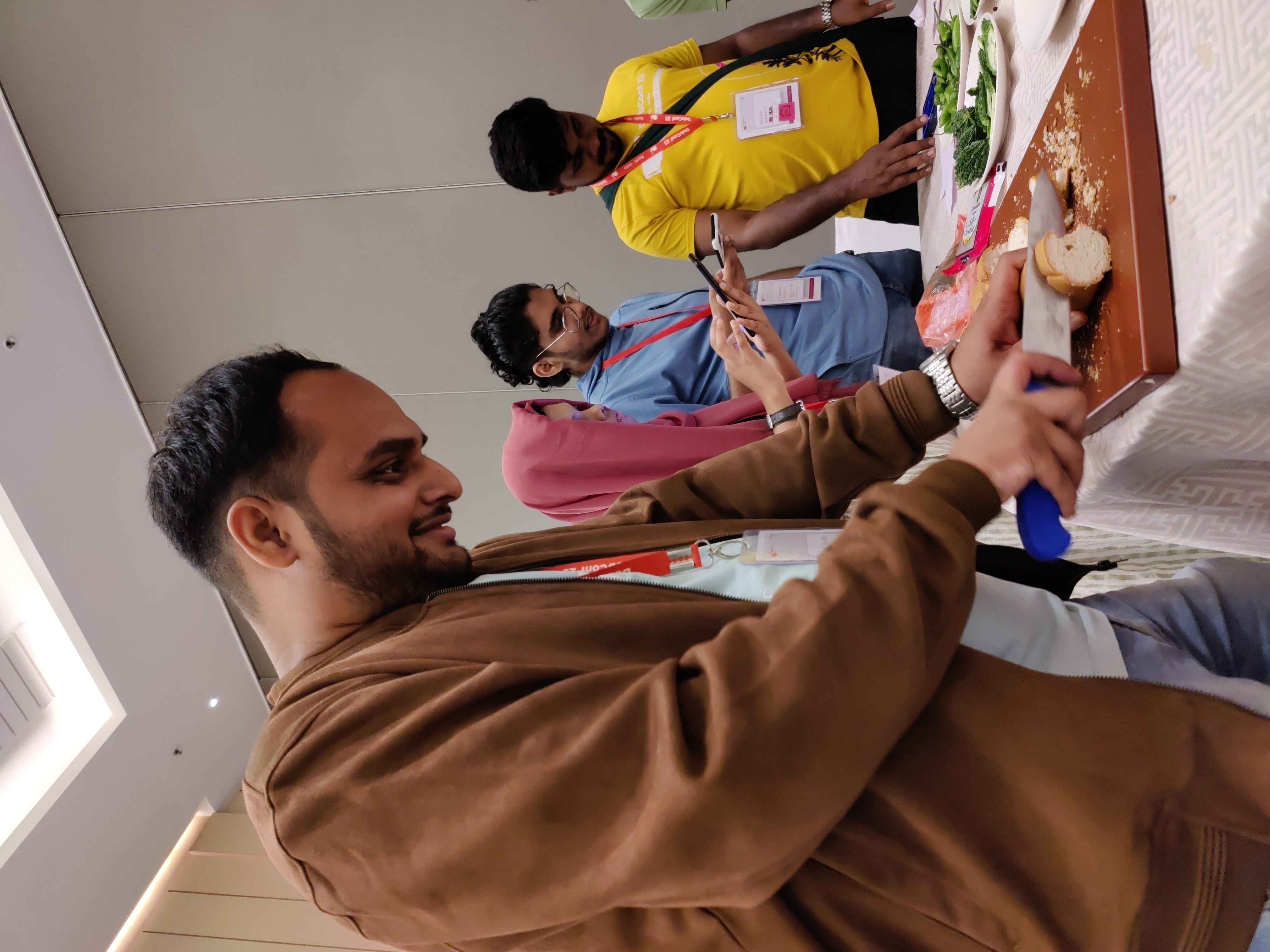

Tomorrow is going to be a new first day in a new job for me: I am joining the  Squasher in action

Squasher in action

My stall. Photo credits: Tejaswini.

My stall. Photo credits: Tejaswini.

Snacks and tea at the front desk. CC-BY-SA 4.0 by Ravi Dwivedi.

Snacks and tea at the front desk. CC-BY-SA 4.0 by Ravi Dwivedi.

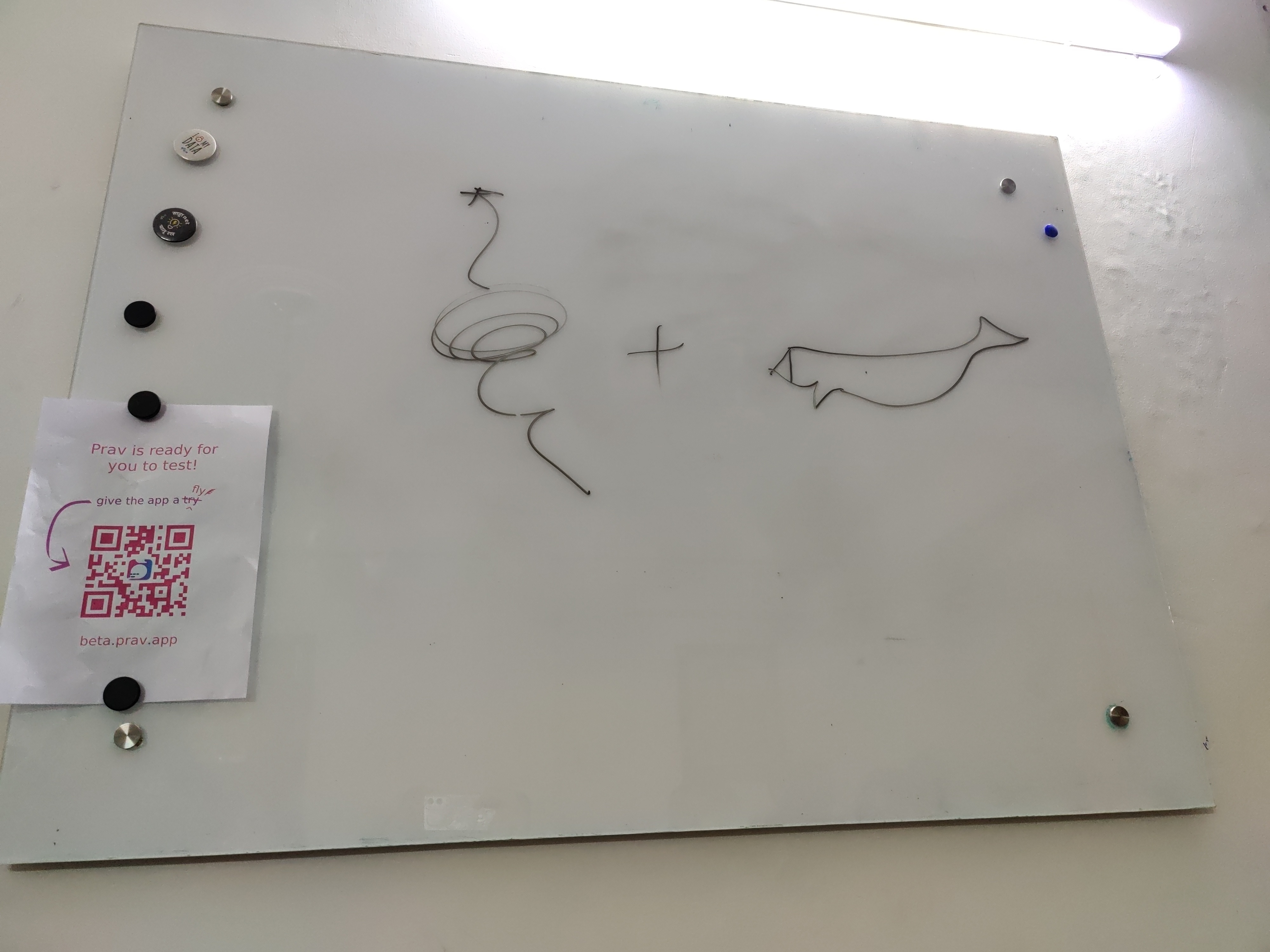

Pictionary drawing nowhere close to the intended word Wireguard :), which was guessed. Photo by Ravi Dwivedi, CC-BY-SA 4.0.

Pictionary drawing nowhere close to the intended word Wireguard :), which was guessed. Photo by Ravi Dwivedi, CC-BY-SA 4.0.

Group photo. Photo credits: Tejaswini.

Group photo. Photo credits: Tejaswini.

Tasty cake. CC-BY-SA 4.0 by Ravi Dwivedi.

Tasty cake. CC-BY-SA 4.0 by Ravi Dwivedi.

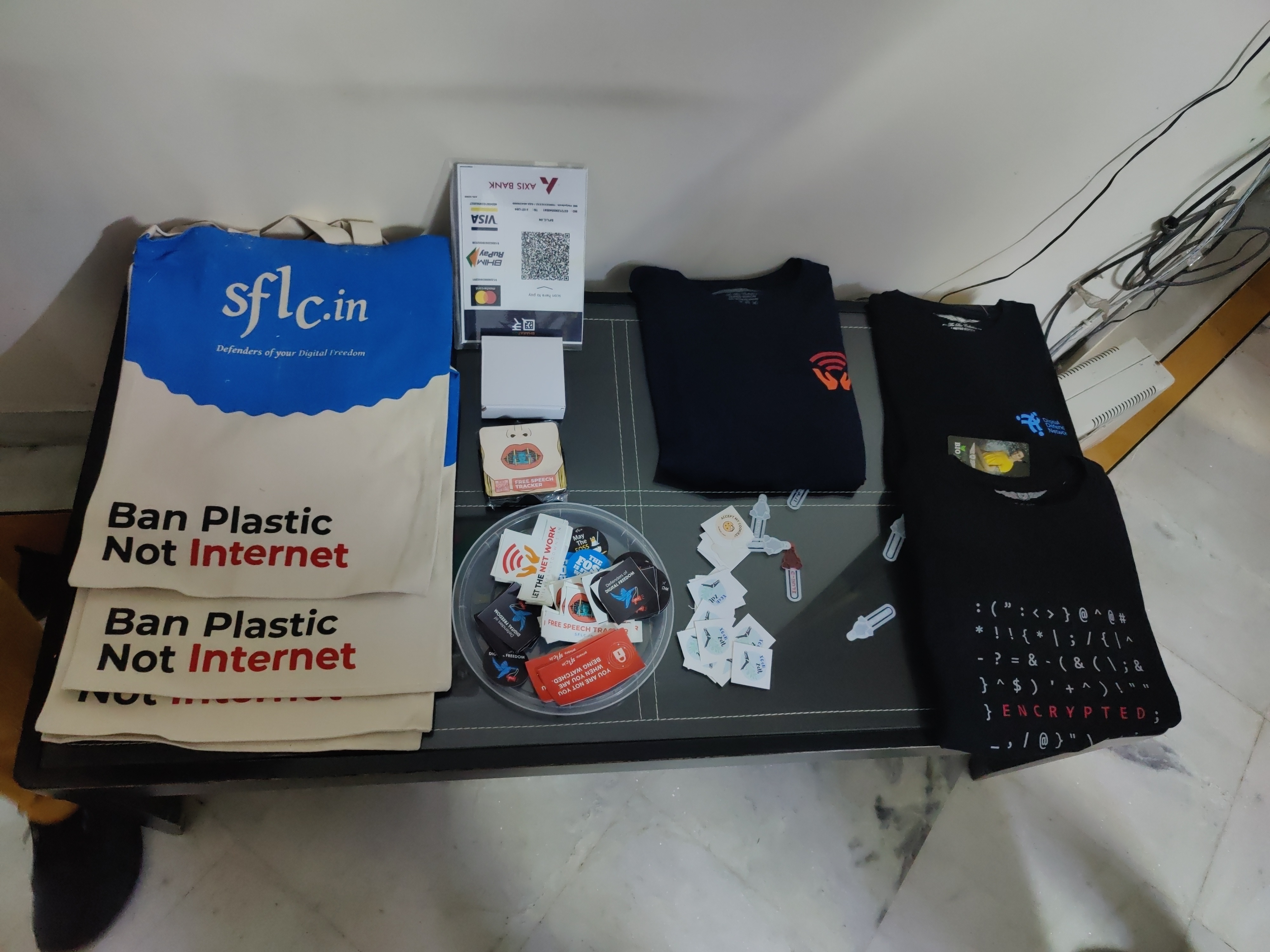

Merchandise by sflc.in. CC-BY-SA 4.0 by Ravi Dwivedi.

Merchandise by sflc.in. CC-BY-SA 4.0 by Ravi Dwivedi.

My previously mentioned

My previously mentioned

Physical keyboard found.

Physical keyboard found.

Official logo of DebConf23

Official logo of DebConf23

Suresh and me celebrating Onam in Kochi.

Suresh and me celebrating Onam in Kochi.

Four Points Hotel by Sheraton was the venue of DebConf23. Photo credits: Bilal

Four Points Hotel by Sheraton was the venue of DebConf23. Photo credits: Bilal

Photo of the pool. Photo credits: Andreas Tille.

Photo of the pool. Photo credits: Andreas Tille.

View from the hotel window.

View from the hotel window.

This place served as lunch and dinner place and later as hacklab during debconf. Photo credits: Bilal

This place served as lunch and dinner place and later as hacklab during debconf. Photo credits: Bilal

Picture of the awesome swag bag given at DebConf23. Photo credits: Ravi Dwivedi

Picture of the awesome swag bag given at DebConf23. Photo credits: Ravi Dwivedi

My presentation photo. Photo credits: Valessio

My presentation photo. Photo credits: Valessio

Selfie with Anisa and Kristi. Photo credits: Anisa.

Selfie with Anisa and Kristi. Photo credits: Anisa.

Me helping with the Cheese and Wine Party.

Me helping with the Cheese and Wine Party.

This picture was taken when there were few people in my room for the party.

This picture was taken when there were few people in my room for the party.

Sadhya Thali: A vegetarian meal served on banana leaf. Payasam and rasam were especially yummy! Photo credits: Ravi Dwivedi.

Sadhya Thali: A vegetarian meal served on banana leaf. Payasam and rasam were especially yummy! Photo credits: Ravi Dwivedi.

Sadhya thali being served at debconf23. Photo credits: Bilal

Sadhya thali being served at debconf23. Photo credits: Bilal

Group photo of our daytrip. Photo credits: Radhika Jhalani

Group photo of our daytrip. Photo credits: Radhika Jhalani

A selfie in memory of Abraham.

A selfie in memory of Abraham.

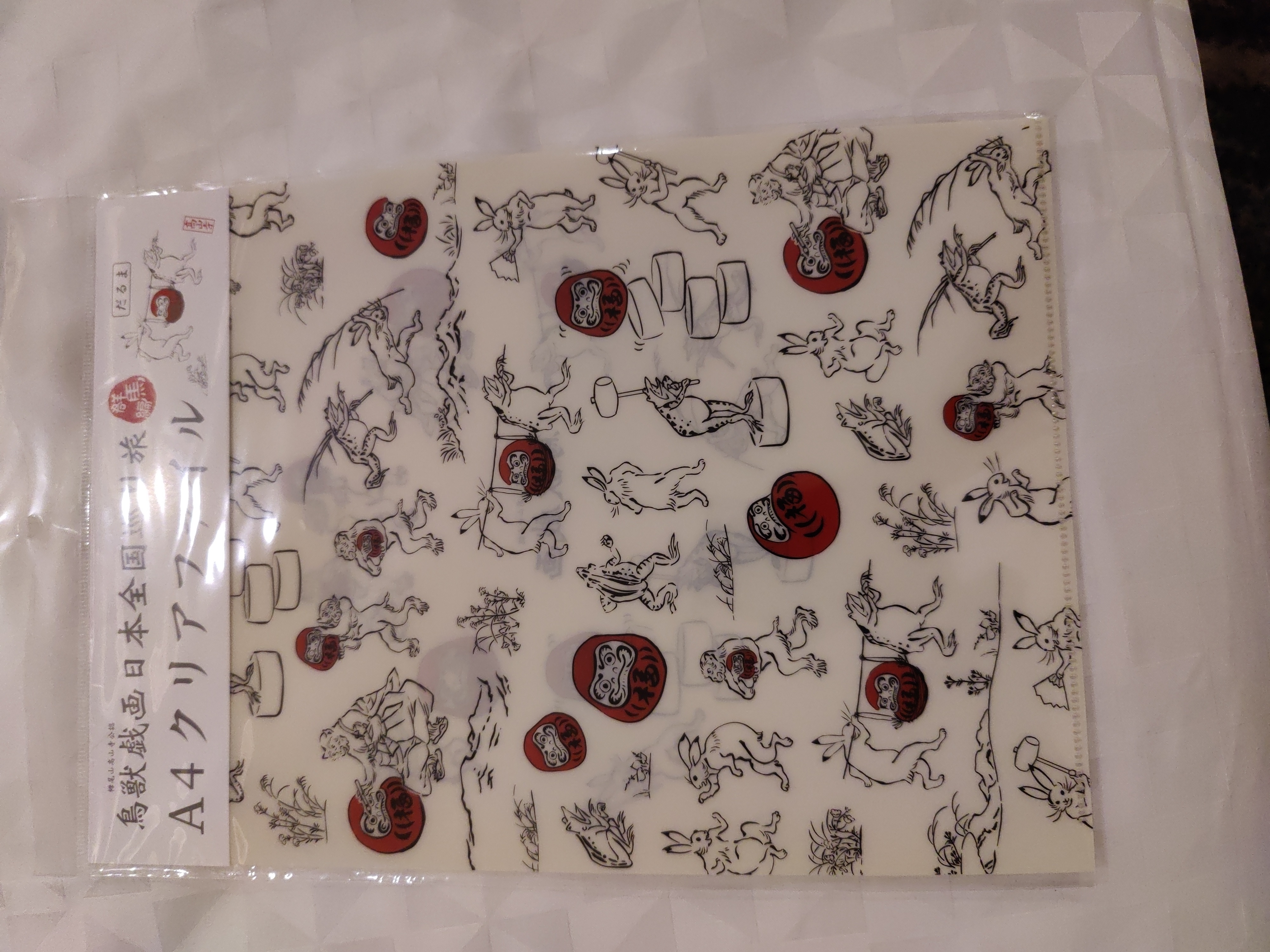

Thanks to Niibe Yutaka (the person towards your right hand) from Japan (FSIJ), who gave me a wonderful Japanese gift during debconf23: A folder to keep pages with ancient Japanese manga characters printed on it. I realized I immediately needed that :)

Thanks to Niibe Yutaka (the person towards your right hand) from Japan (FSIJ), who gave me a wonderful Japanese gift during debconf23: A folder to keep pages with ancient Japanese manga characters printed on it. I realized I immediately needed that :)

This is the Japanese gift I received.

This is the Japanese gift I received.

Bits from the DPL. Photo credits: Bilal

Bits from the DPL. Photo credits: Bilal

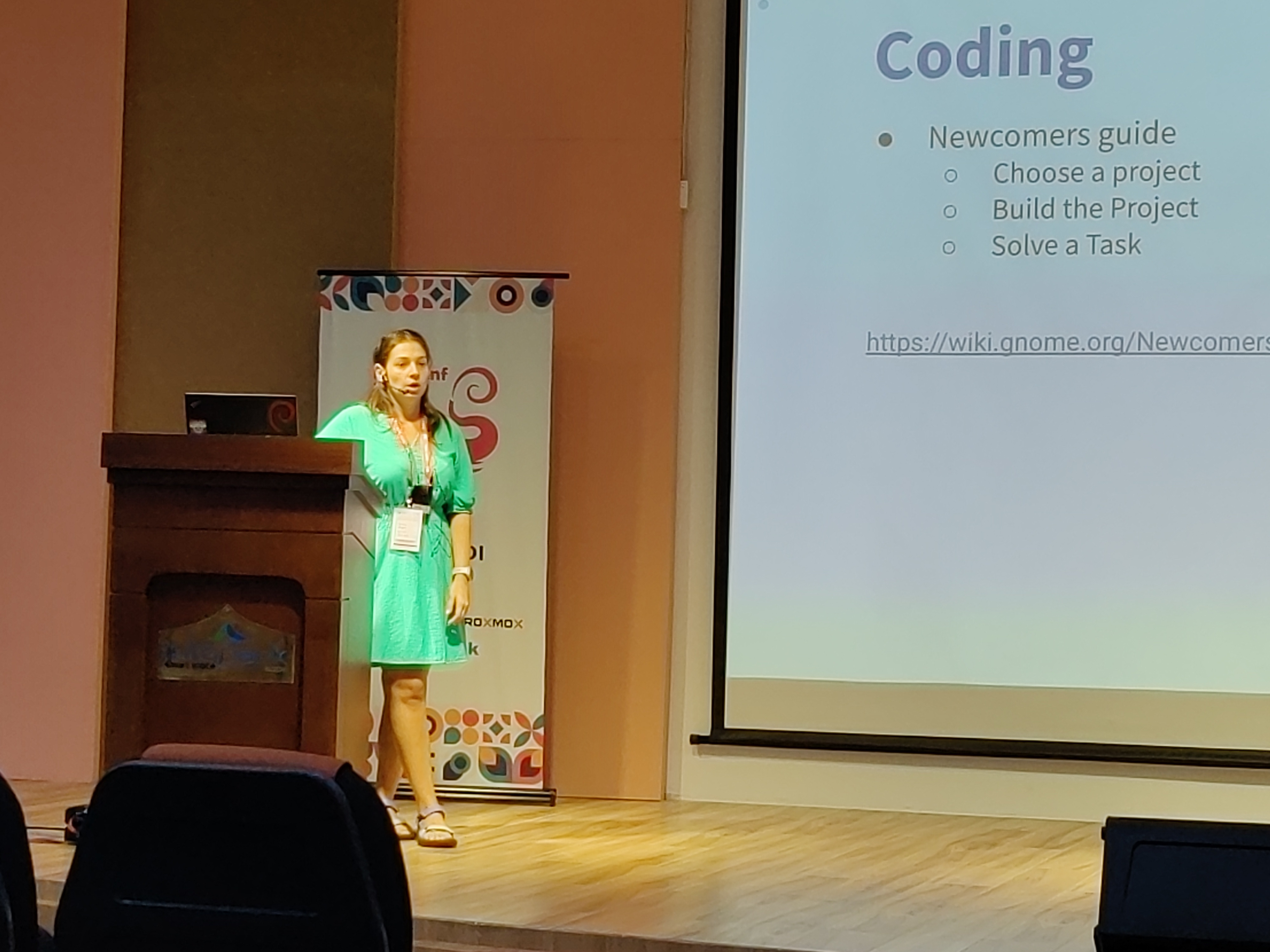

Kristi on GNOME community. Photo credits: Ravi Dwivedi.

Kristi on GNOME community. Photo credits: Ravi Dwivedi.

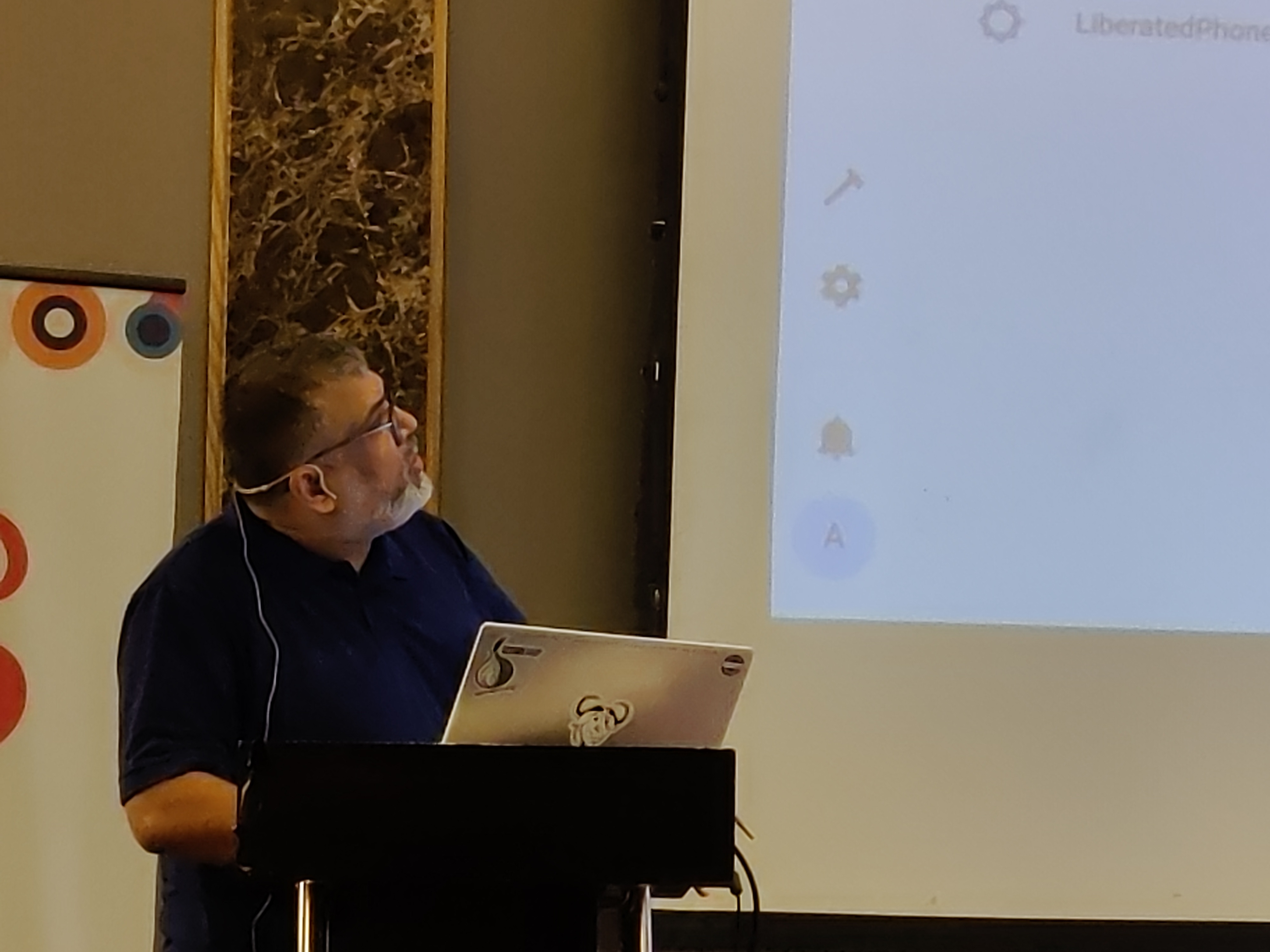

Abhas' talk on home automation. Photo credits: Ravi Dwivedi.

Abhas' talk on home automation. Photo credits: Ravi Dwivedi.

I was roaming around with a QR code on my T-shirt for downloading Prav.

I was roaming around with a QR code on my T-shirt for downloading Prav.

Me in mundu. Picture credits: Abhijith PA

Me in mundu. Picture credits: Abhijith PA

From left: Nilesh, Saswata, me, Sahil. Photo credits: Sahil.

From left: Nilesh, Saswata, me, Sahil. Photo credits: Sahil.

Ruchika (taking the selfie) and from left to right: Yash,

Ruchika (taking the selfie) and from left to right: Yash,  Joost and me going to Delhi. Photo credits: Ravi.

Joost and me going to Delhi. Photo credits: Ravi.

This post describes how to handle files that are used as assets by jobs and pipelines defined on a common gitlab-ci

repository when we include those definitions from a different project.

This post describes how to handle files that are used as assets by jobs and pipelines defined on a common gitlab-ci

repository when we include those definitions from a different project.

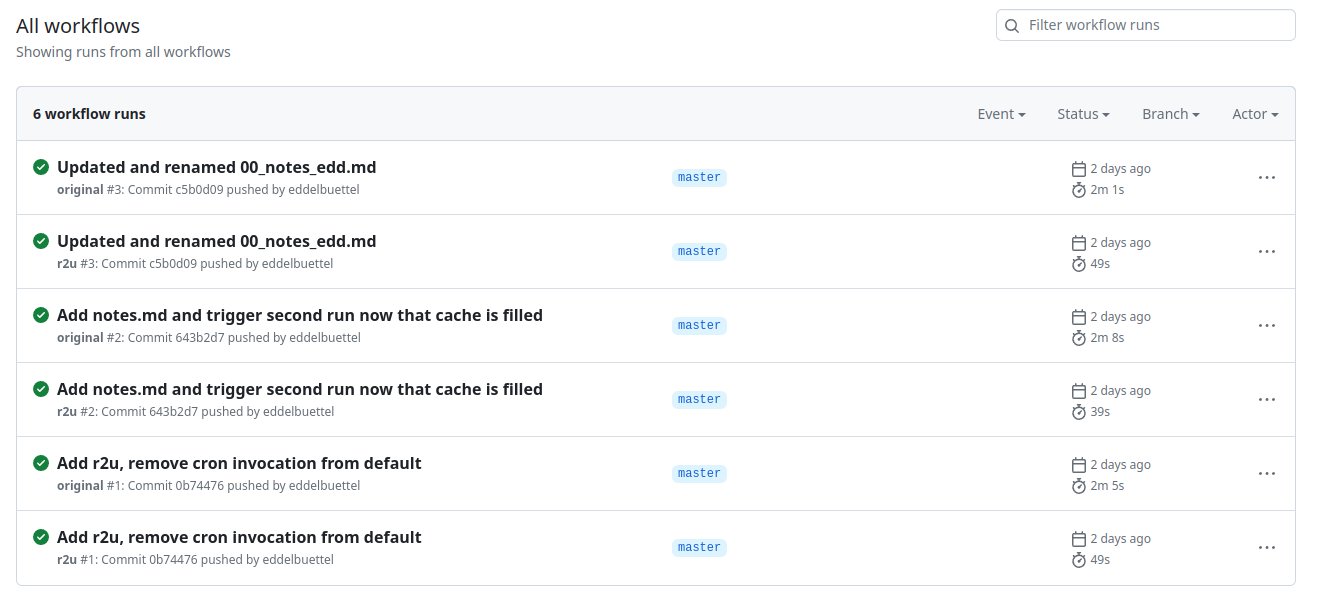

Edited 2023-08-25 01:32 BST to correct a slip.

Edited 2023-08-25 01:32 BST to correct a slip. Welcome to the 43th post in the

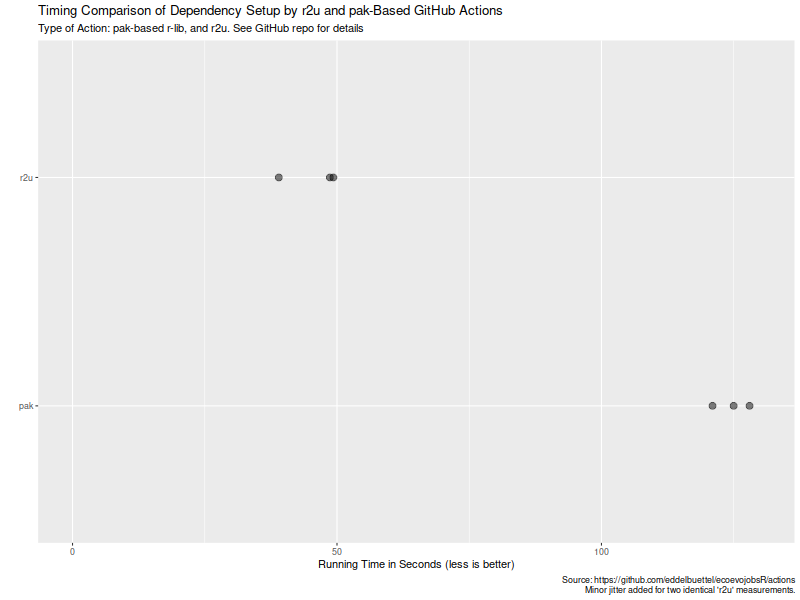

Welcome to the 43th post in the  Turns out maybe not so much (yet ?). As the

Turns out maybe not so much (yet ?). As the  Now, this is of course entirely possibly that not all possible venues

for speedups were exploited in how the action setup was setup. If so,

please file an issue at the

Now, this is of course entirely possibly that not all possible venues

for speedups were exploited in how the action setup was setup. If so,

please file an issue at the